The Refinery Model

Most people building with LLMs think they're running a software company. They're not.

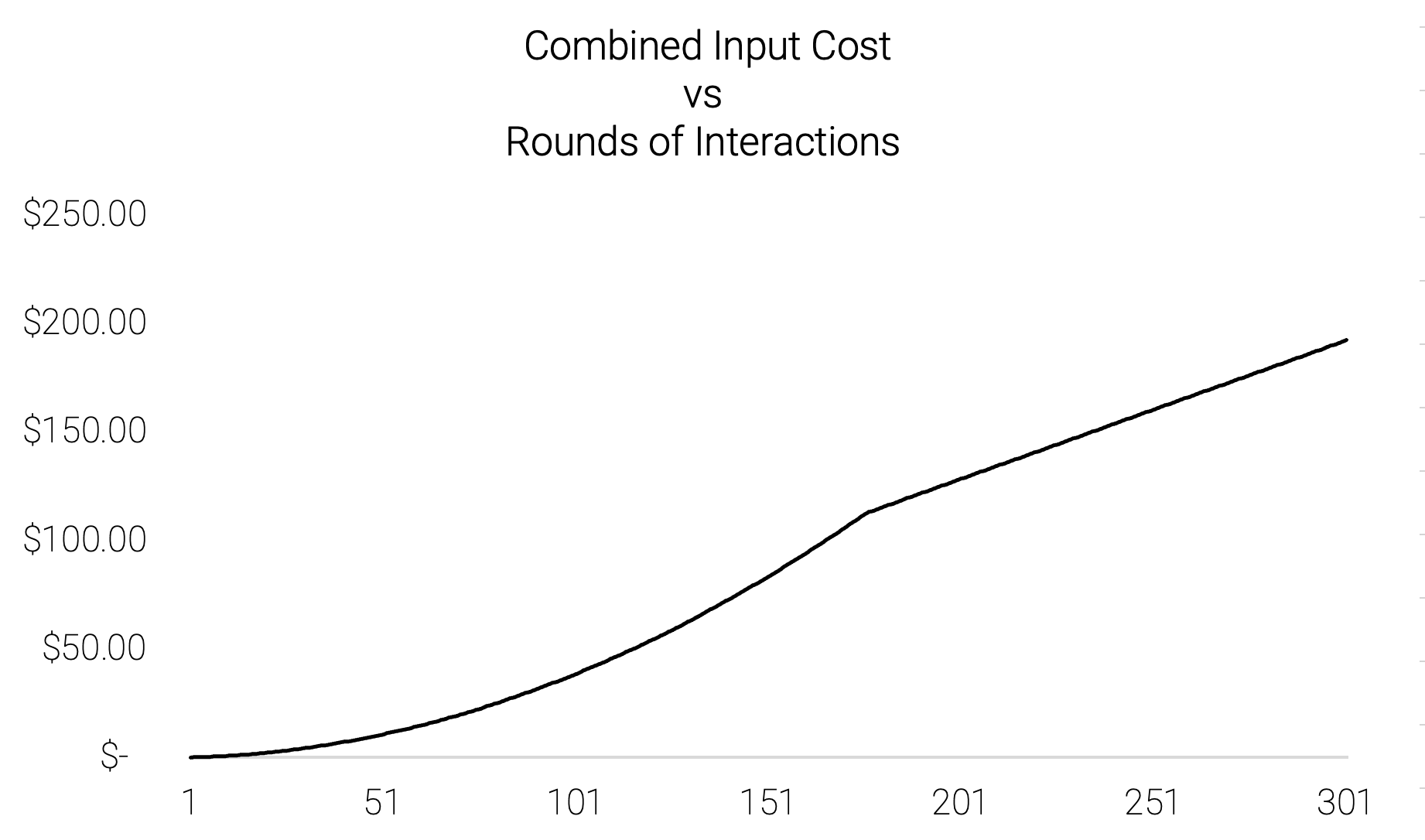

I realized this while looking at our API bills last quarter. The costs didn't behave like software costs at all. They grew in this weird nonlinear way that seemed wrong at first, then obvious, then unavoidable.

Software scales beautifully. You build it once and serve it forever. Your hundredth customer costs almost nothing to serve. Your millionth barely more. That's the entire economic foundation of SaaS. Fixed costs up front, near-zero marginal costs forever. It's what made Salesforce and Atlassian possible.

LLMs break that model completely.

The Memory Tax

When you talk to an LLM, it has to remember everything you said. Not metaphorically. Literally. Every previous message gets fed back through the model with each new response.

Think about what that means. Say you're having a conversation and each turn adds roughly 1,000 tokens. On the first turn, the model processes 1,000 tokens. On the second turn, it rereads the first turn plus the new one, so 2,000 more tokens. On the third turn, it rereads everything again. 3,000 more tokens.

The total isn't 10,000 tokens after 10 turns. It's 55,000 tokens. The cost grows quadratically. It's literally an arithmetic sum where each turn costs more than the last because the model has to reprocess the entire conversation history.

You can write this as a simple formula. If each turn adds k tokens, then after n turns your total cost is roughly k * n * (n + 1) / 2. Which is just a fancy way of saying costs pile up fast: double the length of the conversation and you roughly quadruple the bill.
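Here is a minimal sketch of that arithmetic, assuming every turn adds the same number of tokens and the model reprocesses the full history each time. The function names are mine, and the sketch ignores output tokens and any prompt caching a provider might offer.

```python
def cumulative_tokens(turns: int, tokens_per_turn: int) -> int:
    """Total tokens processed when every turn rereads the whole history."""
    # Turn i processes i * tokens_per_turn tokens: the history plus the new turn.
    return sum(i * tokens_per_turn for i in range(1, turns + 1))


def cumulative_tokens_closed_form(turns: int, tokens_per_turn: int) -> int:
    """Same total via the arithmetic-series formula k * n * (n + 1) / 2."""
    return tokens_per_turn * turns * (turns + 1) // 2


print(cumulative_tokens(10, 1_000))              # 55000
print(cumulative_tokens_closed_form(10, 1_000))  # 55000
print(cumulative_tokens_closed_form(20, 1_000))  # 210000
```

Doubling the conversation from 10 to 20 turns takes the total from 55,000 to 210,000 tokens, a bit under four times as much.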

Now, this doesn't go on forever. Every model has a maximum context window. Claude has 200,000 tokens. GPT-4 Turbo has 128,000. Once you hit that limit, the per-turn cost stops growing. The oldest messages fall off, every new turn costs about one full window, and you enter a linear cost regime. So the quadratic stretch isn't infinite. It's bounded. But it's still brutal for the first chunk of any conversation.
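The two regimes can be sketched like this, under the simplifying assumption that the history is truncated to exactly the window size once it overflows. The function names are hypothetical, and the 1,000 tokens per turn is the same illustrative figure used above.

```python
def tokens_on_turn(turn: int, tokens_per_turn: int, context_limit: int) -> int:
    """Tokens processed on one turn, capped at the context window."""
    return min(turn * tokens_per_turn, context_limit)


def cumulative_tokens_capped(turns: int, tokens_per_turn: int, context_limit: int) -> int:
    """Total tokens processed across all turns with a context window cap."""
    return sum(
        tokens_on_turn(i, tokens_per_turn, context_limit)
        for i in range(1, turns + 1)
    )


WINDOW = 200_000  # context window size quoted in the text

print(cumulative_tokens_capped(200, 1_000, WINDOW))  # 20100000
print(cumulative_tokens_capped(300, 1_000, WINDOW))  # 40100000
```

The first 200 turns follow the quadratic curve. After that, each additional turn adds a flat 200,000 tokens, so the total keeps climbing, just in a straight line.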

This creates a fundamentally different cost structure. Traditional software has high fixed costs and low marginal costs. LLMs have low fixed costs and high, nonlinear marginal costs. That changes everything about how you should price.

Why Seats Don't Work Anymore

SaaS companies charge per seat because it maps cleanly to cost. One Salesforce user looks roughly like another Salesforce user. Sure, some power users click more buttons, but in aggregate, the variation is small enough that you can just average it out.

LLM products can't do this. One user might send three quick messages a day asking for recipe ideas. Another might be running an automated research pipeline that burns through 10 million tokens a week. Same product. Same "seat." Easily a hundred times the cost.

So why do we still see seat-based pricing everywhere? Because investors understand it. It makes your ARR look clean and predictable. You can show a nice graph of seats over time and talk about expansion revenue and net dollar retention. All the metrics VCs know how to evaluate.

But underneath, it's fake. Every seat-based LLM pricing plan is just prepaid tokens with better branding. You're not really selling seats. You're selling a pool of usage that you've arbitrarily divided by headcount.
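To make the "prepaid tokens" point concrete, here is a rough sketch of what a flat seat price looks like once you subtract compute. The $30 seat price, the $3 per million token cost, and both monthly token counts are hypothetical numbers picked for illustration, not figures from any real product.

```python
def seat_margin(seat_price: float, tokens_used: int, cost_per_million: float) -> float:
    """Monthly margin on one seat: flat price minus the compute it consumed."""
    compute_cost = tokens_used / 1_000_000 * cost_per_million
    return seat_price - compute_cost


SEAT_PRICE = 30.0       # hypothetical flat monthly price per seat
COST_PER_MILLION = 3.0  # hypothetical blended cost per million tokens

# Hypothetical monthly usage: a casual user vs. an automated pipeline
# running at about 10 million tokens a week.
light_user = seat_margin(SEAT_PRICE, 50_000, COST_PER_MILLION)
heavy_user = seat_margin(SEAT_PRICE, 40_000_000, COST_PER_MILLION)

print(f"light user margin: ${light_user:.2f}")  # light user margin: $29.85
print(f"heavy user margin: ${heavy_user:.2f}")  # heavy user margin: $-90.00
```

Under these assumptions, one heavy seat wipes out the margin from roughly three light ones.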

The honest model looks more like a phone plan. Remember when mobile carriers switched from unlimited to tiered data? That happened because different users consumed wildly different amounts of bandwidth, and the economics stopped making sense. LLM companies are going through the same transition right now, just faster.

You're selling thinking capacity. And thinking capacity is measured in tokens, not seats.

Refineries, Not Factories

Here's the mental model that changed how I think about this.

Software companies are like car factories. You invest heavily to build the assembly line. Then you crank out cars. Each additional car costs something, but the average cost per car drops as you make more. Scale creates efficiency. This is the classic manufacturing learning curve that SaaS borrowed and optimized further by making marginal costs nearly zero.

LLM companies are refineries.

You don't manufacture the crude oil. You buy it from Anthropic or OpenAI at wholesale rates. Your job is to refine that raw compute into something more valuable. You turn generic intelligence into specific, useful cognition.

A basic query is regular unleaded. You take in a simple question, run it through the model once, and output an answer. Low cost, low value. Deep reasoning is premium. You chain together multiple model calls, you add retrieval, you verify outputs, you retry failed attempts. Higher cost, but much higher value.

You're selling octane levels. That's the business.

And your business lives in the spread. If you're paying Anthropic three dollars per million input tokens and selling the refined output for the equivalent of six dollars per million tokens, your business exists in that three-dollar refinery margin. That's your entire economic moat.

This is completely different from traditional software economics. Software companies win by maximizing the ratio between fixed costs and revenue. Build once, sell infinitely. LLM companies win by maximizing the ratio between wholesale compute and retail cognition. Buy low, refine well, sell high.

The implication is that scale doesn't help you the way it helps software companies. Making more widgets doesn't reduce your per-widget cost when every widget requires fresh crude oil. You can get better at refining. You can negotiate better rates with your suppliers. You can find customers willing to pay more for higher grades. But you can't escape the underlying variable cost structure.

What Gets Measured

Once you see LLM companies as refineries instead of software companies, the right metrics become obvious.

The most important number isn't ARR. It's token margin. How much value do you create per token of compute you consume, net of what that token cost you? If you're paying three cents per thousand tokens and creating ten cents of value from them, you have a seven-cent margin. That's your business.
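As a small sketch, token margin can be computed per unit of tokens as both an absolute figure and a fraction of the value created. The function name is my own. The inputs are the two examples from the text: three cents against ten cents per thousand tokens, and three dollars against six dollars per million.

```python
def token_margin(value_per_unit: float, cost_per_unit: float) -> tuple[float, float]:
    """Return (absolute margin, margin as a fraction of value) per unit of tokens."""
    absolute = value_per_unit - cost_per_unit
    return absolute, absolute / value_per_unit


# Cents per thousand tokens: pay 3, create 10.
print(token_margin(10, 3))  # (7, 0.7)

# Dollars per million tokens: pay 3, sell for 6.
print(token_margin(6, 3))   # (3, 0.5)
```

Tracking the fraction alongside the absolute number makes products with different price points comparable.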

The second number is utilization. In a refinery, you want your equipment running at capacity. Idle refineries lose money. Same with LLM infrastructure. If you've committed to a certain amount of compute capacity, you want to use all of it. Every unused token you've paid for is pure loss.
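A minimal sketch of the utilization metric, assuming you have prepaid for a fixed block of tokens in a billing period. The commitment size, usage, and price below are hypothetical.

```python
def utilization(tokens_used: int, tokens_committed: int) -> float:
    """Share of committed capacity that was actually consumed."""
    return tokens_used / tokens_committed


def idle_spend(tokens_used: int, tokens_committed: int, cost_per_million: float) -> float:
    """Money paid for committed tokens that were never used."""
    unused = max(0, tokens_committed - tokens_used)
    return unused / 1_000_000 * cost_per_million


COMMITTED = 1_000_000_000  # hypothetical: 1 billion tokens prepaid
USED = 800_000_000         # hypothetical: 800 million tokens consumed

print(utilization(USED, COMMITTED))      # 0.8
print(idle_spend(USED, COMMITTED, 3.0))  # 600.0
```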

The third number is grade mix. Not all cognitive products have the same margin. Basic queries might have thin margins. Specialized reasoning might have fat margins. You want to optimize your product mix toward higher-margin cognitive outputs, the same way refineries optimize toward higher-margin fuels.
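One way to see the effect of grade mix is to compute a blended margin across product grades. This is a sketch under made-up numbers: the `Grade` class, the grade names, and the revenue and cost figures are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Grade:
    name: str
    revenue: float       # revenue from this grade in the period
    compute_cost: float  # token spend attributable to this grade


def blended_margin(grades: list[Grade]) -> float:
    """Overall margin fraction across all grades, weighted by revenue."""
    revenue = sum(g.revenue for g in grades)
    cost = sum(g.compute_cost for g in grades)
    return (revenue - cost) / revenue


# Mostly basic queries: 10% margin on basic, 60% on deep reasoning.
basic_heavy = [Grade("basic", 700.0, 630.0), Grade("reasoning", 300.0, 120.0)]
# Same total revenue, same per-grade margins, shifted toward reasoning.
premium_heavy = [Grade("basic", 400.0, 360.0), Grade("reasoning", 600.0, 240.0)]

print(blended_margin(basic_heavy))    # 0.25
print(blended_margin(premium_heavy))  # 0.4
```

Nothing about either product changed between the two scenarios. Only the mix moved, and the blended margin went from 25% to 40%.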

These metrics are familiar if you've ever looked at commodities businesses or manufacturing. They're completely foreign if you've only done software. And that's the problem. Most LLM founders come from software. Most LLM investors come from software. Everyone is using the wrong mental models.

The Pricing Problem

This creates a genuine strategic problem that nobody has solved well yet.

If you price like software, you're exposed to runaway costs. A few power users can destroy your unit economics. You either have to cap usage, which makes the product feel broken, or you have to eat the cost, which kills your margins.

If you price purely on usage, you solve the cost problem but you create a demand problem. Usage-based pricing is scary to customers. They don't know what their bill will be. Finance teams hate it. Procurement processes don't know how to evaluate it. You end up competing on price instead of value because price is the only concrete number anyone can see.

The best answer right now seems to be hybrid models. You charge a base fee that covers some amount of included usage, then you meter overages. This is roughly how Snowflake's capacity contracts work. It's roughly how committed-spend plans on AWS work. It's probably how LLM products will work too.
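A hybrid plan of this kind reduces to a few lines of billing logic. The sketch below assumes a single flat overage rate. The base fee, included allowance, and rate are hypothetical.

```python
def monthly_bill(
    tokens_used: int,
    base_fee: float,
    included_tokens: int,
    overage_per_million: float,
) -> float:
    """Base fee plus metered overage for usage beyond the included allowance."""
    overage_tokens = max(0, tokens_used - included_tokens)
    return base_fee + overage_tokens / 1_000_000 * overage_per_million


# Hypothetical plan: $50 base, 5 million tokens included, $6 per million after that.
print(monthly_bill(3_000_000, 50.0, 5_000_000, 6.0))  # 50.0
print(monthly_bill(8_000_000, 50.0, 5_000_000, 6.0))  # 68.0
```

The customer gets a predictable floor, and the vendor gets paid for heavy usage instead of absorbing it.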

But even that's just a transition. The real endpoint is probably something more like energy markets. You're selling cognitive capacity. Some of it is baseload, predictable, priced in advance. Some of it is peak demand, variable, priced in real time. You'll have spot markets for tokens. You'll have futures contracts for compute. The infrastructure will look less like Salesforce and more like an electricity grid.

This sounds insane when you say it out loud, but it's where the economics push you. Cognitive work is becoming a commodity. Commodities need commodity market structures.

What This Means For Founders

If you're building an LLM company, you need to think like a refinery operator, not a software founder.

Understand your cost curve at the token level. Know exactly what you pay for input tokens, output tokens, different model tiers, different context lengths. Track it weekly. A 20% increase in your supplier's pricing can wipe out a thin margin overnight.
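To see how sensitive margin is to supplier pricing, here is a quick sketch. It assumes a single blended cost per million tokens and a fixed retail price. The function name and the two retail prices are hypothetical.

```python
def margin_after_cost_change(price: float, cost: float, cost_change: float) -> float:
    """Margin fraction after supplier cost moves by cost_change (0.20 means +20%)."""
    new_cost = cost * (1 + cost_change)
    return (price - new_cost) / price


SUPPLIER_COST = 3.0  # dollars per million tokens, as in the earlier example

for price in (6.0, 3.5):  # a healthy spread and a thin one (both hypothetical)
    before = margin_after_cost_change(price, SUPPLIER_COST, 0.0)
    after = margin_after_cost_change(price, SUPPLIER_COST, 0.20)
    print(f"price ${price:.2f}: margin {before:.1%} -> {after:.1%}")

# price $6.00: margin 50.0% -> 40.0%
# price $3.50: margin 14.3% -> -2.9%
```

With a wide spread, a 20% supplier increase hurts. With a thin one, it puts you underwater.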

Build dynamic pricing that reflects your actual costs. You can't charge the same price for a query that uses 1,000 tokens and a query that uses 100,000 tokens. Your pricing needs to map to reality or you'll end up subsidizing power users with revenue from light users, which is not a stable equilibrium.

Optimize your refinery margin. This means getting better at doing more with fewer tokens. It means building smarter prompt chains. It means caching aggressively. It means choosing the cheapest model that produces acceptable output. Every token you save flows directly to your bottom line.
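"Choosing the cheapest model that produces acceptable output" can be sketched as a simple routing rule. Everything here is hypothetical: the tier names, the prices, and the quality scores, which in practice would come from your own evaluations rather than a hard-coded table.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ModelTier:
    name: str
    cost_per_million: float  # hypothetical price per million tokens
    quality: float           # hypothetical score from your own evals, 0 to 1


CATALOG = [
    ModelTier("small", 0.5, 0.70),
    ModelTier("medium", 3.0, 0.85),
    ModelTier("large", 15.0, 0.95),
]


def cheapest_acceptable(catalog: list[ModelTier], min_quality: float) -> ModelTier:
    """Pick the lowest-cost tier whose quality meets the bar for this task."""
    candidates = [m for m in catalog if m.quality >= min_quality]
    if not candidates:
        raise ValueError("no model meets the quality bar")
    return min(candidates, key=lambda m: m.cost_per_million)


print(cheapest_acceptable(CATALOG, 0.80).name)  # medium
print(cheapest_acceptable(CATALOG, 0.60).name)  # small
```

The hard part is not the routing. It is deciding what "acceptable" means for each task and measuring it honestly.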

Think about grade mix. Can you push customers toward higher-value use cases? Can you sunset low-margin products? Can you charge more for deep reasoning than simple queries? You want to look like a premium refinery, not a commodity processor.

What This Means For Investors

If you're investing in LLM companies, you need to ask different questions.

Don't ask what the ARR multiple is. Ask what the token margin is. A company doing $10 million in revenue with a 10% token margin is worth less than a company doing $5 million in revenue with a 50% token margin. The first keeps $1 million after compute. The second keeps $2.5 million. The first company has a commodity business. The second company has a real moat.

Don't ask about sales efficiency. Ask about utilization rates. Is this company efficiently using its compute commitments? Or are they paying for capacity they're not selling?

Don't ask about net dollar retention. Ask about grade mix trends. Are customers moving toward higher-margin cognitive products over time? Or are they commoditizing downward?

And most importantly, ask about supplier concentration. If a company is 100% dependent on OpenAI or Anthropic, and those providers can change pricing or cut them off at any time, that's existential risk. The best LLM companies will be those that can switch between suppliers, or better yet, run their own models for at least some workloads.

The Next Five Years

Here's what I think happens.

LLM costs keep dropping. The big labs are in a race to the bottom on per-token pricing. That's good for users but terrible for LLM wrapper companies that compete on price. Refinery margins compress.

The winners will be companies that move up the value chain. They'll stop selling raw cognition and start selling refined cognitive products. Not "access to an LLM," but "automated research" or "compliance checking" or "code review." Products where the value is obvious and the token count is hidden.

Usage-based pricing becomes standard. Seats die off as a primary metric. Everyone moves to some version of prepaid credits or metered billing. The UX gets better. Customers learn to think in terms of cognitive budgets the same way they think about cloud budgets now.

And most importantly, the market stratifies. There will be commodity LLM companies with thin margins competing on price and volume. And there will be specialized LLM companies with fat margins competing on value and quality. Like any mature refinery market.

The question every founder should be asking is: which kind of company are you building? Because the playbooks are completely different.

If you're building commodity, you need massive scale, operational excellence, and brutal cost discipline. Think Costco.

If you're building premium, you need deep expertise, strong differentiation, and customers who value quality over price. Think LVMH.

Both can work. But you have to know which game you're playing.